40%

Reduction in infrastructure costs

2x

More frequent deployments

20k+

Database requests per day

100GB+

Data processed each day

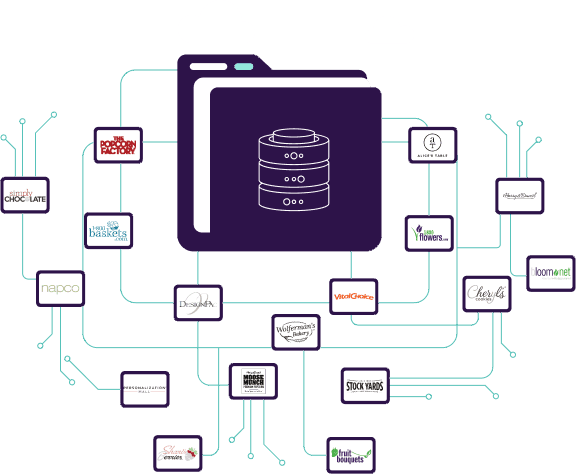

About our client

With a 36-year history, 17 brands and more than 2 billion in annual sales, our client is the leading US retailer in the gift industry.

Country

USA (New York)

Technology

Java, JavaScript, Kotlin

Cooperation

2021 - Present

Why is Softkit an excellent tech partner for US-based companies? Find the answer below

The goal - to bring all product data to one place

Our client has 17 subsidiaries operating under their own brands. Each has a separate system for tracking product information. The goal was to create a unifying space where stakeholders could access information about every product.

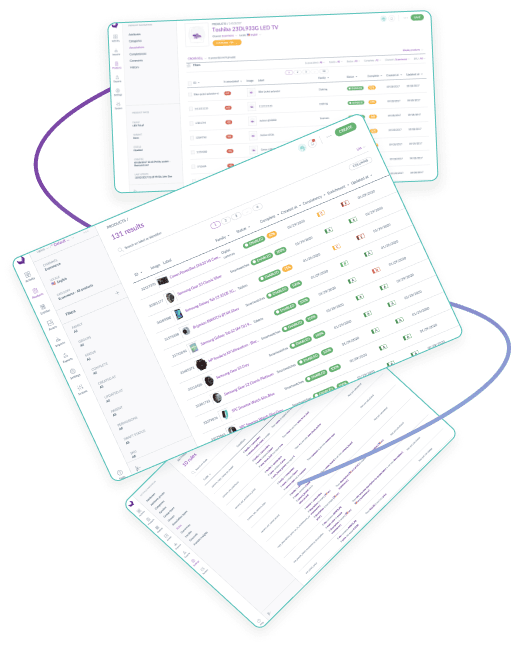

Akeneo – the new data destination

A product information management system called Akeneo was chosen as a new destination for all the subsidiary product data.

Currently, data is duplicated to Akeneo. In the future, product information management systems used by subsidiaries will no longer be required.

In Akeneo, stakeholders can find every product detail, like whether it was sold or discounted, what types are available, which stores sell it, etc.

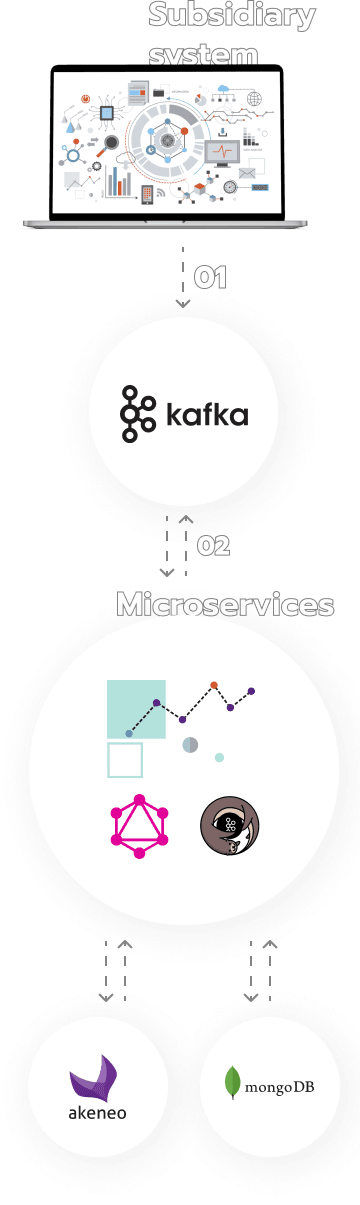

The data flow

So, how does it all work?

Apache Kafka — to transfer data

First, data from subsidiary systems goes into Apache Kafka, an event streaming platform that buffers incoming data and spreads the traffic more evenly over time, so that downstream services are not overloaded and no data is lost. Data is put in a queue, from where it can be retrieved and processed.
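The buffering idea can be pictured with a small sketch. It uses an in-memory `BlockingQueue` as a stand-in for a Kafka topic and is not the Kafka client API; the class name, message names and batch size are assumptions made for illustration only.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.LinkedBlockingQueue;

// In-memory stand-in for a message queue; this is NOT the Kafka client API.
class QueueBufferSketch {

    // Removes up to maxBatch messages that are currently waiting in the queue.
    static List<String> pollBatch(BlockingQueue<String> queue, int maxBatch) {
        List<String> batch = new ArrayList<>();
        queue.drainTo(batch, maxBatch);
        return batch;
    }

    public static void main(String[] args) {
        BlockingQueue<String> queue = new LinkedBlockingQueue<>();

        // A burst of five updates arrives at once from a subsidiary system.
        for (int i = 1; i <= 5; i++) {
            queue.add("product-update-" + i);
        }

        // The consumer works through the backlog at its own pace, two messages at a time.
        List<String> batch;
        while (!(batch = pollBatch(queue, 2)).isEmpty()) {
            System.out.println("Processing " + batch);
        }
    }
}
```

The producer can write as fast as it needs to, while the consumer decides how much to take per step. That is the property that smooths out traffic spikes.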

Microservice architecture solves a variety of challenges

Microservices then access data from different sources and process it. Each microservice has a distinct goal, for example:

Data mapping – unifying the data from different sources with a Dynamic Data Mapping Engine

Data can start its journey in various formats, most commonly in JSON. To upload the data to Akeneo, first, we need to bring it to Akeneo's standard format. Changing data format is also referred to as data mapping.

Our team created a custom Dynamic Data Mapping Engine – a microservice that processes the data according to instructions, which we described in a separate configuration file.
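As a rough illustration of configuration-driven mapping, here is a minimal sketch. It is not the actual Dynamic Data Mapping Engine: the class name, the field names and the rule format (source field to target field) are all hypothetical, and the rules are built in code rather than loaded from a file.

```java
import java.util.LinkedHashMap;
import java.util.Map;

// Hypothetical sketch of config-driven field mapping; all field names are invented.
class DataMappingSketch {

    // Renames source fields to target fields according to the mapping rules.
    // Source fields that have no rule are dropped from the result.
    static Map<String, Object> map(Map<String, Object> source, Map<String, String> rules) {
        Map<String, Object> target = new LinkedHashMap<>();
        for (Map.Entry<String, String> rule : rules.entrySet()) {
            if (source.containsKey(rule.getKey())) {
                target.put(rule.getValue(), source.get(rule.getKey()));
            }
        }
        return target;
    }

    public static void main(String[] args) {
        // In a real setup these rules would be read from the configuration file.
        Map<String, String> rules = new LinkedHashMap<>();
        rules.put("itemName", "name");
        rules.put("itemCode", "sku");

        Map<String, Object> source = new LinkedHashMap<>();
        source.put("itemName", "Gift basket");
        source.put("itemCode", "GB-001");
        source.put("internalNote", "not mapped");

        System.out.println(map(source, rules));
    }
}
```

Keeping the rules outside the code means a new source format can be supported by editing configuration instead of redeploying the service.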

Creating statistical reports and optimizing resources with Kafka Streams

Information gets into Kafka as a continuous flow of time series data. Apache Kafka Streams is a client library that allows users to aggregate data for a certain period, process it using custom scripts and save the output results. We integrated Kafka Streams to create statistical reports and optimize system resources: whenever several product updates are made within a short timeframe, we only save the last version, which decreases database load and infrastructure costs.
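The "save only the last version" rule can be sketched in plain Java. This is an illustration of the idea, not the Kafka Streams API and not the project's code; the `Update` type and its fields are assumptions, and the list stands in for the updates collected during one time window.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

// Plain-Java illustration of "keep only the last update per product"; not the Kafka Streams API.
class LatestUpdateSketch {

    // A product update: the product id plus the payload to store. Names are hypothetical.
    static final class Update {
        final String productId;
        final String payload;

        Update(String productId, String payload) {
            this.productId = productId;
            this.payload = payload;
        }
    }

    // Collapses the updates of one time window so that each product
    // appears once, with its most recent payload.
    static Map<String, String> latestPerProduct(List<Update> window) {
        Map<String, String> latest = new LinkedHashMap<>();
        for (Update update : window) {
            latest.put(update.productId, update.payload); // later updates overwrite earlier ones
        }
        return latest;
    }

    public static void main(String[] args) {
        List<Update> window = new ArrayList<>();
        window.add(new Update("GB-001", "price=20"));
        window.add(new Update("GB-002", "price=35"));
        window.add(new Update("GB-001", "price=18"));

        // Three incoming updates result in only two database writes.
        System.out.println(latestPerProduct(window));
    }
}
```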

Data filtering and process optimization with GraphQL

Our client's team regularly creates new landing pages for marketing purposes. Moreover, the company allows third-party partner vendors to sell its products. Often such stakeholders do not need all the product details, just several information fields. For their convenience, our team integrated GraphQL. Now, everyone can select and download only the data they need.

GraphQL also simplifies and speeds up the data retrieval process. Typically a front end would first send a request to the server, which would then access the database on another server. With GraphQL, the front end can retrieve data directly from the database. It also eliminates the need for an additional server-to-server connection and associated productivity losses.

The GraphQL API communicates with servers over HTTP, so connections are short-lived and do not have a significant impact on server performance.
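To show what selecting only the needed fields looks like from the client side, here is a small sketch that builds a GraphQL query string. The `product` query, its `sku` argument and the field names are hypothetical, since the real schema is not described here.

```java
import java.util.List;

// Builds a GraphQL query that asks only for the listed fields.
// The "product" query and its "sku" argument are a hypothetical schema.
class GraphQlQuerySketch {

    static String buildQuery(String sku, List<String> fields) {
        // Simplified on purpose: production code would pass the SKU as a
        // GraphQL variable instead of concatenating it into the query text.
        return "query { product(sku: \"" + sku + "\") { " + String.join(" ", fields) + " } }";
    }

    public static void main(String[] args) {
        // A landing page that needs only the name and the price of a product.
        System.out.println(buildQuery("GB-001", List.of("name", "price")));
    }
}
```

A partner who needs different fields changes only the list passed to `buildQuery`; no new endpoint is required on the server side.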

Data goes to Akeneo for further management

After being mapped by one of the microservices, the Dynamic Data Mapping Engine, data is transferred into Akeneo through the Akeneo PIM API. Our client's employees can see and efficiently manage all the product information here. Akeneo facilitates the workflow of virtually any department, including logistics operations, warehouse management, marketing and sales. Microservices can also access information stored and modified in Akeneo whenever needed.

MongoDB to avoid data loss

The data is also saved in MongoDB. Akeneo has a limit of 20k requests per day, so data above the threshold might be lost unless it is saved elsewhere. MongoDB also protects the data: if something ever happens to Akeneo's servers, our data can be quickly restored. Our team can scale MongoDB as much as needed, making it an efficient backup solution. Microservices can access data stored in MongoDB when necessary.
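One way to picture the interplay between the daily request limit and the backup store is the sketch below. It is a simplified assumption about the flow, not the project's implementation: two in-memory lists stand in for Akeneo and MongoDB, and the class and method names are invented.

```java
import java.util.ArrayList;
import java.util.List;

// Simplified model of a daily request budget with a backup store.
// The two lists stand in for Akeneo and MongoDB; no real client is used.
class DailyQuotaSketch {
    private final int dailyLimit;
    private int usedToday = 0;

    final List<String> sentToPim = new ArrayList<>();
    final List<String> backup = new ArrayList<>();

    DailyQuotaSketch(int dailyLimit) {
        this.dailyLimit = dailyLimit;
    }

    // Every update is written to the backup store. It is also forwarded to the
    // PIM while the daily budget lasts. Returns true if it was forwarded.
    boolean handle(String update) {
        backup.add(update);
        if (usedToday < dailyLimit) {
            usedToday++;
            sentToPim.add(update);
            return true;
        }
        return false;
    }

    // Called when a new day starts and the request budget is available again.
    void resetForNewDay() {
        usedToday = 0;
    }

    public static void main(String[] args) {
        DailyQuotaSketch quota = new DailyQuotaSketch(2); // tiny limit for the demo
        for (String update : List.of("u1", "u2", "u3")) {
            System.out.println(update + " forwarded: " + quota.handle(update));
        }
        System.out.println("Backup holds " + quota.backup.size() + " updates");
    }
}
```

Updates that exceed the budget are not lost, because they are already in the backup store and can be forwarded once the limit resets.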

Infrastructure and deployment optimization

Infrastructure and deployment process optimization allows us to save costs and be more efficient. We used several tools to ensure it:

Lowering microservice management costs

Our client is using Google Cloud Platform infrastructure to process data. Currently, some of the microservices remain idle for most of the time. Our goal for the next three months is to decrease the associated management costs. We will optimize our choice of server types to reduce their total number and the amount of CU we use.

Another part of our strategy is increasing the partition count, meaning more microservices will work simultaneously. We estimate that these changes will reduce infrastructure costs by more than 40%.
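The effect of the partition count can be sketched as follows. The hashing below is a deliberate simplification (Kafka's default partitioner uses a different hash of the key bytes), and the class name and product keys are assumptions for illustration.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

// Simplified view of how keyed messages spread across partitions.
// This does not reproduce Kafka's own partitioner.
class PartitionSketch {

    // The same key always lands in the same partition, so updates
    // for one product keep their order.
    static int partitionFor(String key, int partitionCount) {
        return Math.floorMod(key.hashCode(), partitionCount);
    }

    // Groups keys by the partition they would be assigned to.
    static Map<Integer, List<String>> assign(List<String> keys, int partitionCount) {
        Map<Integer, List<String>> byPartition = new TreeMap<>();
        for (String key : keys) {
            byPartition
                .computeIfAbsent(partitionFor(key, partitionCount), p -> new ArrayList<>())
                .add(key);
        }
        return byPartition;
    }

    public static void main(String[] args) {
        List<String> keys = List.of("GB-001", "GB-002", "GB-003", "GB-004", "GB-005", "GB-006");
        // With more partitions, more consumer instances can share the same workload.
        System.out.println("2 partitions: " + assign(keys, 2));
        System.out.println("4 partitions: " + assign(keys, 4));
    }
}
```

Each partition is read by at most one consumer in a consumer group, so the partition count sets the upper bound on how many instances of a microservice can process the topic in parallel.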

Argo CD for the efficient deployment process

Previously, we used GitLab CI/CD – many tasks had to be done manually and the UI was lacking. To automate and simplify the process, we integrated Argo CD. The tool significantly expands the number of available features. Now, we can deploy twice as often, which means an increase in speed and productivity.

LaunchDarkly – an efficient tool for feature management

LaunchDarkly is an efficient tool used by many companies, including Ryanair, Atlassian, NBC, and others. It allows developers to separate a chosen feature from the rest of the code so that it can be easily configured and switched on or off.

Can be used by any member of your team

LaunchDarkly has an admin panel that doesn’t require technical expertise to use. Hence, a faulty feature can be removed by anyone, anytime – no code redeployment is required. It is beneficial if a company doesn’t have a 24/7 tech support team.

Useful for A/B testing

Marketing and development teams can use feature management for A/B testing: create several versions of an element, switch on the first, collect data, activate another version, collect data again and draw conclusions.
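The on/off switching described in this section can be pictured with a minimal in-memory flag store. This is not the LaunchDarkly SDK; the class, the flag key `new-gift-banner` and the banner texts are hypothetical and serve only to show the principle.

```java
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;

// Minimal in-memory feature flag store; this is NOT the LaunchDarkly SDK.
class FeatureFlagSketch {
    private final Map<String, Boolean> flags = new ConcurrentHashMap<>();

    // In a hosted tool this change would come from the admin panel.
    void set(String flag, boolean enabled) {
        flags.put(flag, enabled);
    }

    // Unknown flags fall back to the supplied default value.
    boolean isEnabled(String flag, boolean defaultValue) {
        return flags.getOrDefault(flag, defaultValue);
    }

    // The feature code checks the flag at runtime, so no redeployment is needed.
    String renderBanner() {
        return isEnabled("new-gift-banner", false) ? "new banner" : "old banner";
    }

    public static void main(String[] args) {
        FeatureFlagSketch flags = new FeatureFlagSketch();
        System.out.println(flags.renderBanner()); // flag not set yet, default applies
        flags.set("new-gift-banner", true);
        System.out.println(flags.renderBanner()); // new version is now active
        flags.set("new-gift-banner", false);
        System.out.println(flags.renderBanner()); // faulty feature switched off again
    }
}
```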

So, why do many US companies choose Softkit as their tech partner? Here are some reasons:

Our communication and English skills

We speak classic American English. Not some local version of the language – we speak your language.

Moreover, you can rest assured that we mean exactly what we say. No cultural barriers will prevent us from discussing every project detail freely and openly.

We pay our taxes ourselves

Tax-wise, hiring us is cost-effective. We pay our taxes ourselves, according to local legislation, so you do not need to worry about this aspect.

Moreover, since our taxes contribute to the budget of Ukraine, you would be supporting our country and our determination to preserve democratic values on our land.

Our tech expertise will make your business better

We will let you know if we see an area that can be optimized to save you time, maintenance costs or other resources. Some of our clients were start-ups when we started working with them and now they have multi-million revenue.

We take care of the social benefits of our employees

Our company provides its employees with all the necessary benefits, such as days off, sick leave, weekends and insurance. You get the final estimate for our work, with no hidden or additional expenses.

Our time zone

We have a 10-hour and a 7-hour time difference with the West Coast and the East Coast, respectively. When it comes to development, this has several advantages:

Still plenty of time to communicate

Each day we have 2-3 hours of work time overlap with West Coast partners and 4+ hours with East Coast clients – plenty of time to communicate, receive updates and discuss all the necessary project details.

Web and app maintenance happens while your clients are asleep

When we deploy a new product version or make updates, your users will see the standard “We are sorry, the website is under maintenance. Try again in 15 minutes.” page. It is best to do this when most users are sound asleep and there is virtually no traffic.

A part of your 24/7 tech support.

1.5+ years

Of successful cooperation

3 months

To set up data transfer to Akeneo

1 professional

Dedicated to the project development team

Check out our Real Estate Marketplace case study below to learn how our suggestions helped them significantly increase their revenue and survive the COVID-19 crisis when the sales were low.